Presented at the 24th International Exhibition "Inequalities" at the Milan Triennale, Not For Her. AI Revealing the Unseen is an installation by the Politecnico di Milano, conceived by Rector Donatella Sciuto together with the research team led by Nicola Gatti, Ingrid Paoletti and Matteo Ruta, with the artistic direction of Umberto Tolino and Ilaria Bollati.

The installation is a work by the Politecnico di Milano.

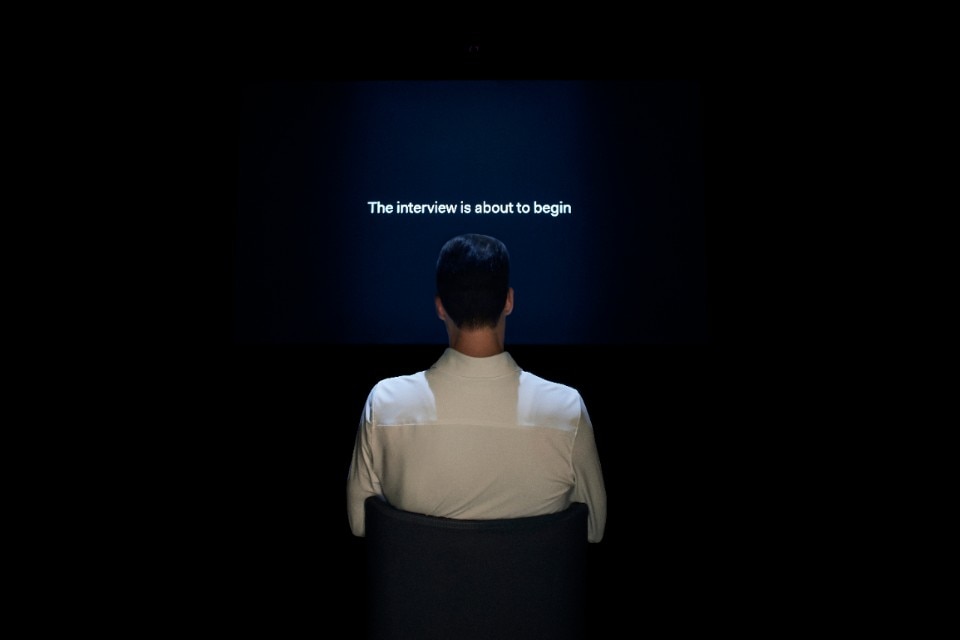

The work turns the job interview format into an immersive experience that uses generative AI to focus on the gender discrimination still entrenched in selection processes. Those who play along might find themselves being asked things like, "How do you think you would handle the job if you were to have a child?" and other discriminatory questions, courtesy of a work that does not unmask AI biases but returns human ones to sender.

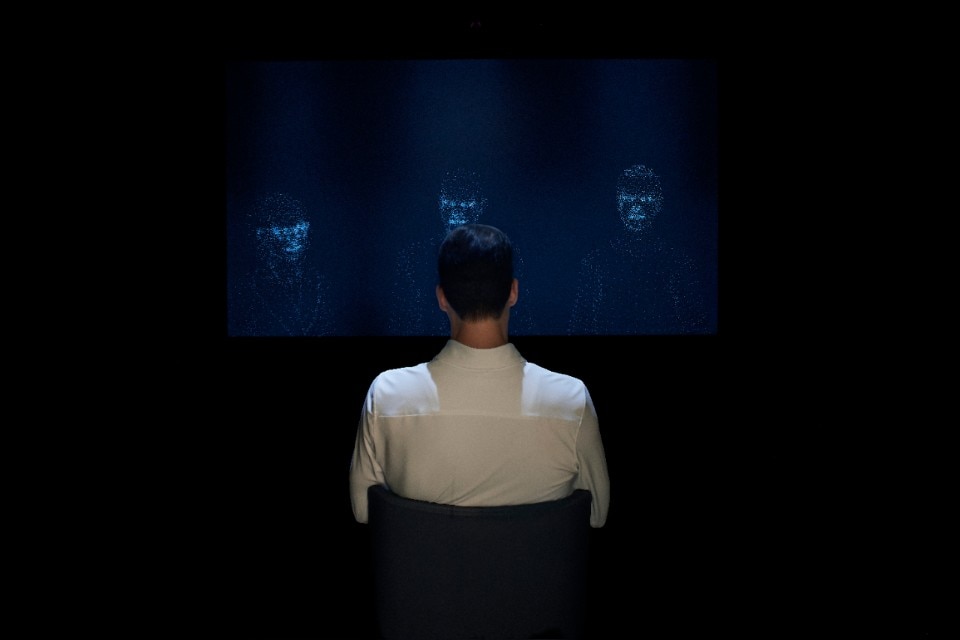

The heart of the project is a Large Language Model (a version of ChatGPT) with Retrieval-Augmented Generation (RAG): the system augments each user request, in real time, with text fragments from HR documents, regulations, and testimonies of discrimination. "From a technical point of view, the system combines various tools already available with algorithms that we researched and implemented specifically for it," explains Rector Donatella Sciuto. "This is because current language models are not able to independently conduct a complex conversation directed toward a specific goal." Each fork in the road identifies a specific form of bias (assumed motherhood, sexual objectification, age, family care) and directs the subsequent questions. "The discussion can take endless twists and turns that unfold along a multiplicity of stories, all of them, however, with a single purpose: to make the visitor experience discrimination. Each is the result of the individual respondent's answers."
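The retrieval step described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the three fragments are invented stand-ins for the installation's corpus, and the word-overlap scoring replaces whatever retrieval method the team actually uses (a production system would more likely rely on embedding similarity).

```python
import re
from collections import Counter

# Hypothetical stand-ins for the corpus (HR documents, regulations,
# testimonies); the real material is not published in the article.
FRAGMENTS = [
    "HR guideline: interview questions must relate to the duties of the role.",
    "Regulation: asking a candidate about pregnancy or family plans is unlawful.",
    "Testimony: I was asked who would look after my children during overtime.",
]


def tokenize(text):
    """Lowercase the text and count its alphabetic words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, fragments, k=2):
    """Return up to k fragments that share at least one word with the query,
    ranked by the number of shared words (a toy similarity measure)."""
    q = tokenize(query)
    scored = [(sum((q & tokenize(f)).values()), f) for f in fragments]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [f for score, f in scored[:k] if score > 0]


def build_prompt(user_utterance, fragments, k=2):
    """Prepend the retrieved fragments to what the candidate just said."""
    context = retrieve(user_utterance, fragments, k)
    lines = ["Context fragments:"]
    lines += [f"- {c}" for c in context]
    lines += ["", f"Candidate said: {user_utterance}"]
    return "\n".join(lines)


prompt = build_prompt("I have two children and I can work overtime", FRAGMENTS)
print(prompt)
```

The string returned by `build_prompt` is what would be handed to the language model; the call to the model itself is left out because the article does not say which API the team uses.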

Dialogue is not based on words alone. Voice recognition, facial analysis and posture tracking extract timbre, pauses, micro-expressions and head tilt; those signals are incorporated into the prompts sent to the LLM and modulate the avatar's tone of voice and gestures. "We use a decision tree that follows the visitor step by step, based on their responses. It is a predictive model in which each node represents a variable, a 'plot twist,' as they say in the jargon. The path defines the specific type of discrimination that the visitor will go through," the rector points out. The result is a malleable conversation, capable of changing register with ease.
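A minimal sketch of how a decision tree and non-verbal signals might be combined into a prompt follows. It is not the team's implementation: the `Node` structure, the bias labels attached to each node, the keyword classifier and the signal names (`pause_seconds`, `head_tilt`) are all hypothetical, and the questions are adapted from those quoted in the article.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    bias: str        # form of bias this node stands for (assumed label)
    question: str    # seed question handed to the LLM
    branches: dict = field(default_factory=dict)  # answer label -> next Node


# Hypothetical three-node tree.
partner = Node("family care",
               "Do you have a partner willing to accept your ambitions?")
overtime = Node("assumed motherhood",
                "Are you prepared to stay past 8 p.m. if the children get sick?")
root = Node("assumed motherhood",
            "How do you think you would handle the job if you were to have a child?",
            {"wants_children": overtime, "no_children": partner})


def classify(answer):
    """Toy keyword classifier; a real system would use a model for this."""
    text = answer.lower()
    if "no children" in text or "don't want" in text:
        return "no_children"
    return "wants_children"


def step(node, answer):
    """Follow the branch matching the answer; stay on the node at a leaf."""
    return node.branches.get(classify(answer), node)


def build_prompt(node, signals):
    """Fold the current node and the non-verbal signals into one prompt."""
    cues = ", ".join(f"{name}={value}" for name, value in sorted(signals.items()))
    return (f"Bias in play: {node.bias}\n"
            f"Observed cues: {cues}\n"
            f"Ask, in character: {node.question}")


node = step(root, "I don't want children")
prompt = build_prompt(node, {"pause_seconds": 2.5, "head_tilt": "down"})
print(prompt)
```

The tree keeps the number of trajectories finite, while the wording of each turn is left to the language model, which is one way to read the distinction the article draws between finite paths and open-ended dialogues.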

The trajectories are finite, the dialogues potentially infinite. If the AI detects that the user is a first-time woman, it can prod, "Are you prepared to stay past 8 p.m. if the children get sick?" If the candidate says she does not want children, the bias slips to a more intimate level: "Do you have a partner willing to accept your ambitions?" "I like to use the screenwriter's metaphor," Sciuto clarifies. "We provided information so that the system could assemble a potentially infinite number of dialogues."

To avoid caricatures, the team worked with professional recruiters and with people who shared real experiences of sexism. The anonymized transcripts end up in the RAG engine as living material. "Authenticity is the basis of our credibility," the rector emphasizes. Three weeks of full-time testing, in sessions of ten minutes each, calibrated tone, vocabulary and pacing, eliminating crude stereotypes while preserving the harshness of reality.

Astonishment and anger are the most frequent emotions in the audience. "The first reaction is amazement at the naturalness of the interaction," Sciuto says; "the second is anger: the system makes the user feel very uncomfortable." Some argue with the avatar; others remain silent, bewildered. If respondents talk too much, the algorithm interrupts them; if they appear compliant, the avatar may go so far as to ambiguously invite them to dinner. "The system manages to make the user very uncomfortable, and this triggers feelings such as irritation and disappointment. There have been visitors who have engaged in actual arguments, and others who have 'suffered' it, subdued. I myself have gone through several test sessions and tried to behave differently each time. The emotions I experienced are human. However, we can learn to manage them. This tool can train us to do so."

The Politecnico is considering a traveling version for Open Days and seminars on STEM careers, where the gender gap is still pronounced. "One idea," says Sciuto, "could be to make it available during orientation events, for the benefit of girls who envision academic and working careers in STEM subjects. Discrimination is still strong here. Some companies have also shown interest, not only from a hiring perspective but in the broader dimension of inclusion." An online version, however, is ruled out: it would require computing infrastructure now out of reach even for large universities.

Shifting our gaze from the biases "in" the machine to those "in" society implies new skills, not just new algorithms. "What is important to understand and remember is that the machine has to be calibrated based on specific needs," Sciuto cautions. "These have to be judged with extreme rationality by human beings. Discrimination starts with us. Widening or narrowing inequality is the result of our choices. New skills are needed, even before new systems. We need knowledgeable professionals, not mere users."

Videos by Variante Artistica

We need more STEM graduates, and more women in technical pathways, along with clear regulations, continuous assessment, and controlled datasets. Without these safeguards, technology risks amplifying, rather than reducing, inequalities.

Not For Her upends the usual narrative: an AI programmed to discriminate for the purpose of exposing discrimination. In the paradox of the digital mirror, the avatar shows that the frontier of equity is not decided within language models, but in the choices, cultural before technical, of those who train them.

- Show: "Not For Her. AI Revealing the Unseen"
- Curated by: conceived by Rector Donatella Sciuto together with the research team led by Nicola Gatti, Ingrid Paoletti and Matteo Ruta, with the artistic direction of Umberto Tolino and Ilaria Bollati
- Where: Milan Triennale, 24th International Exhibition - Inequalities
- Dates: May 13 to November 9, 2025
- Videos: Variante Artistica

Opening image: View of the installation "Not For Her. AI Revealing the Unseen," Milan Triennale, 24th International Exhibition - Inequalities. Photo Luca Trelancia