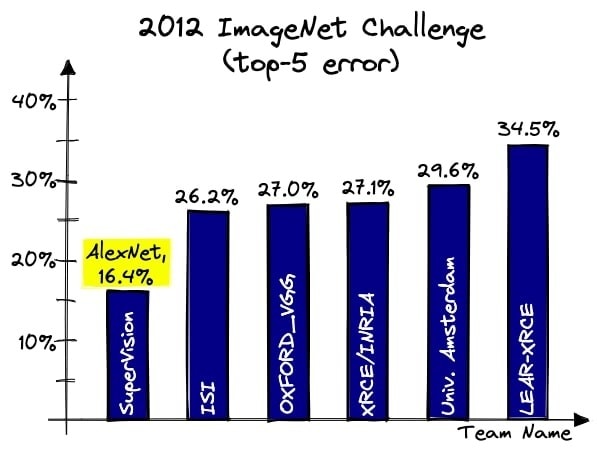

In 2012, the ImageNet Challenge entered its third year. Officially known as the ImageNet Large Scale Visual Recognition Challenge, it had been launched in 2010 by Stanford professor Fei-Fei Li. Its goal was to give the best image recognition software – systems capable of identifying objects or living beings in photographs – a platform to compete. The competition rewarded the most accurate algorithms for analyzing images from a vast database.

That year, the computer vision algorithms that had dominated the challenge – many of them based on a technology called support vector machines (SVMs) – were decisively outperformed by a neural network named AlexNet. This network achieved a top-5 accuracy of roughly 85% (meaning the correct label was among its five best guesses), far surpassing the previous record of about 74%.

So, what caused this dramatic leap? AlexNet was a deep neural network: a machine learning architecture loosely inspired by the human brain and designed to learn from data rather than from hand-written rules. To teach it to recognize, say, cats, the programmer doesn’t tell it what a cat is. Instead, the system is fed hundreds of thousands of labeled images of cats (along with images of other animals) and gradually learns – through trial and error – how to distinguish cats from other creatures.

In simple terms, the system analyzes the images it receives, searching for the features that make a cat a cat. Every time it makes an incorrect prediction, the neural network weakens the connections that led to that mistake. When it makes a correct prediction, those connections are reinforced. The algorithm that works out how much each connection contributed to the error is known as “error back-propagation.”
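The weaken-and-reinforce loop described above can be sketched in a few lines of Python. This is a minimal illustration, not AlexNet: it trains a single artificial neuron, so "back-propagation" reduces to one chain-rule step. The two features (`has_whiskers`, `barks`), the toy data, and the function names are all hypothetical and chosen only for the example.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, labels, epochs=2000, lr=0.5):
    """Fit one sigmoid neuron with plain gradient descent."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # Positive error: the neuron said "cat" too strongly, so the
            # connections that fired are weakened. Negative error: they
            # are reinforced.
            error = pred - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict(weights, bias, x):
    """Return True when the neuron's output leans towards 'cat'."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) > 0.5

# Hypothetical toy data: each sample is (has_whiskers, barks); label 1 = cat.
samples = [(1, 0), (1, 1), (0, 1), (0, 0)]
labels = [1, 0, 0, 0]

weights, bias = train_neuron(samples, labels)
print([predict(weights, bias, x) for x in samples])
```

Nobody tells the neuron that whiskers suggest a cat and barking rules one out; the weights drift in that direction only because the errors push them there. A deep network applies the same idea across many layers and millions of connections.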

This type of learning is “bottom-up” and statistical – quite a contrast to the symbolic approach that dominated before. Back then, it was believed that systems had to be explicitly programmed with rules to complete tasks – for example, defining every characteristic of a cat for the program to recognize it.

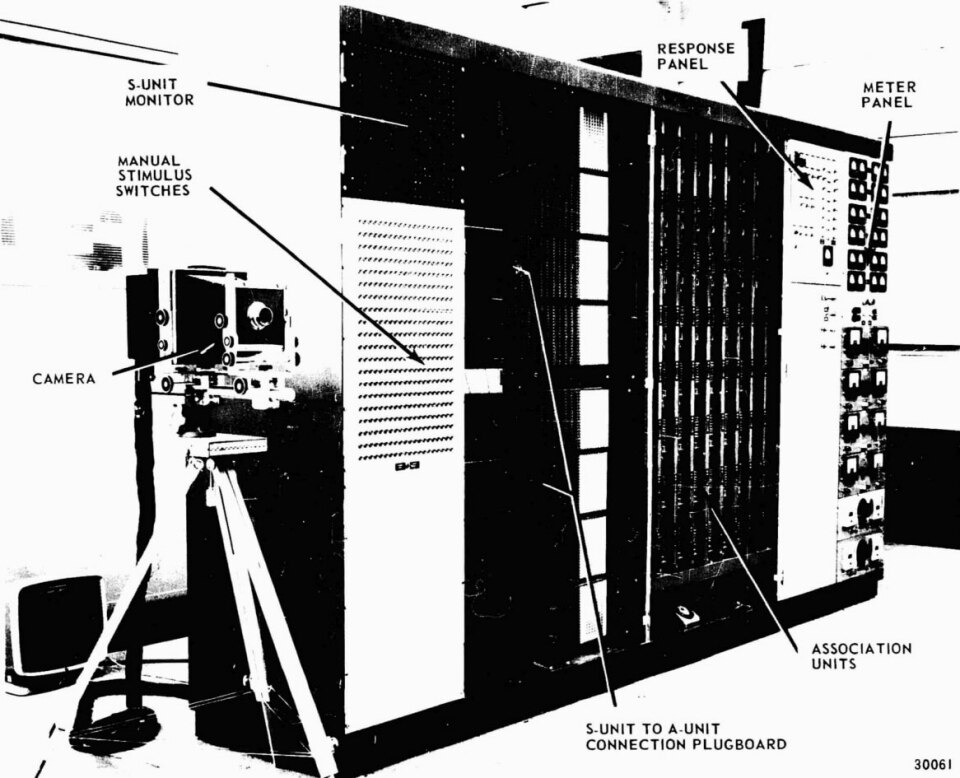

What shocked experts wasn’t just the sudden progress of these systems, but the fact that neural networks had long been considered outdated technology, incapable of realizing their potential. As early as 1958, psychologist Frank Rosenblatt introduced the Mark I Perceptron, one of the first attempts to create a machine capable of learning directly from data. However, the experiment failed to deliver on its promise because the neural network was too simplistic, computing power was insufficient, and there wasn’t enough data to train the system.

In 1969, Marvin Minsky, a key advocate of symbolic AI, exposed the mathematical limits of the Perceptron in his book Perceptrons, co-written with Seymour Papert. This work convinced much of the academic world to abandon the field. The result was a period known as the “AI winter,” during which neural networks were sidelined by the scientific community.

However, not all researchers gave up on the idea. A few, including the “godfathers” of artificial intelligence – Geoff Hinton (who supervised the students behind AlexNet), Yann LeCun, and Yoshua Bengio – continued working on neural networks, almost in secret, believing that this was the best path to achieving the technological holy grail of AI: machines that could learn and perform tasks traditionally associated with human intelligence.

There is no intelligence in artificial intelligence, only extremely complex statistical calculations. That, however, has not stopped deep learning from forever changing the world we live in.

The issue wasn’t with the neural networks themselves or the machine learning algorithms. The theory behind the Perceptron wasn’t wrong – it was the lack of technological tools to fully realize its potential that had held it back.

By the 2010s, however, things were changing rapidly. On one hand, the internet had unleashed a flood of data, providing the raw material needed to create the vast databases required to train neural networks. On the other hand, computational power was advancing at an extraordinary pace.

2012 marked a turning point – when machine learning (and deep learning in particular, where “deep” refers to stacking many layers in a neural network) demonstrated its ability to accomplish feats that had long been out of reach for symbolic systems.

This is why 2012 is often referred to as the “big bang of deep learning” and, by extension, the big leap for AI. From that point on, machine learning spread across industries, transforming the world around us. It powered algorithms that recommended what to buy, watch, or listen to. More critically, it powered those that filtered job applications, assessed loan eligibility, and helped doctors spot tumors. Hardly any field remained untouched by machine learning, which uncovers hidden correlations in vast data sets and uses them to make predictions that are often highly accurate, though never infallible.

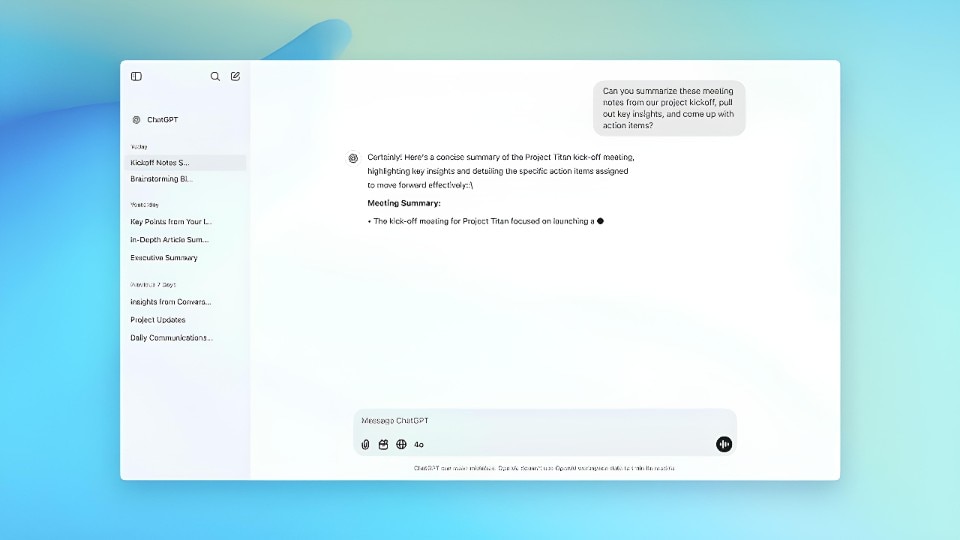

These systems are known as “predictive algorithms,” and in recent years, they’ve been joined by “generative algorithms.” By 2022, large language models (brought to a mass audience by ChatGPT) demonstrated the ability to generate coherent text and respond plausibly to questions on virtually any topic. Other generative systems can create images (Midjourney), videos (Sora), and music (Suno), often producing results that are nothing short of astonishing.

Although the end products may differ, the underlying structure remains the same: a deep neural network that learns from data. The key difference is that the output is no longer a simple classification (“This is a picture of a dog”), but a more complex prediction: the next word in a sentence, the missing pixel in an image, or the next note in a melody.
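To make “predicting the next word” concrete, here is a deliberately tiny sketch. It is not how a large language model works internally: instead of a deep neural network it simply counts which word follows which in a sample text (a so-called bigram model), and the corpus and function names are invented for illustration. What it shares with the real thing is the principle of picking a statistically plausible continuation from patterns in data.

```python
from collections import Counter, defaultdict

def build_bigram_counts(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text, far smaller than anything used in practice.
corpus = "the cat sat on the mat the cat chased the mouse the cat slept"
counts = build_bigram_counts(corpus)

print(predict_next(counts, "the"))  # "cat" follows "the" most often here
print(predict_next(counts, "dog"))  # never seen in the corpus, so None
```

The model has no idea what a cat is; it only knows that, in its data, “cat” is the most common word after “the.” Generative systems replace the counting table with a neural network trained on vastly more text, but the output is still a prediction of what plausibly comes next.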

Thus, predictive and generative AI share the same statistical process: they’re programs that identify patterns in data and use them to make statistically plausible predictions. In AI – a term coined by computer scientist John McCarthy for the 1956 Dartmouth workshop – there’s no “true” intelligence, only incredibly complex statistical calculations. But this hasn’t stopped deep learning from changing the world as we know it.