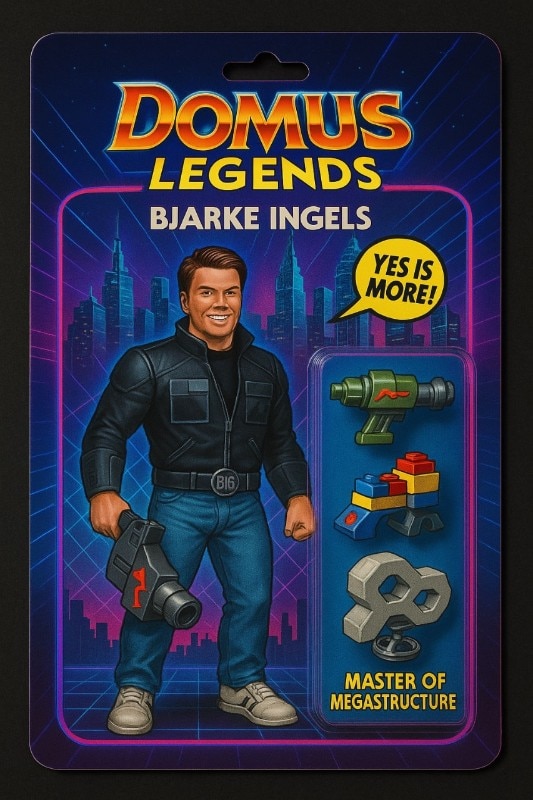

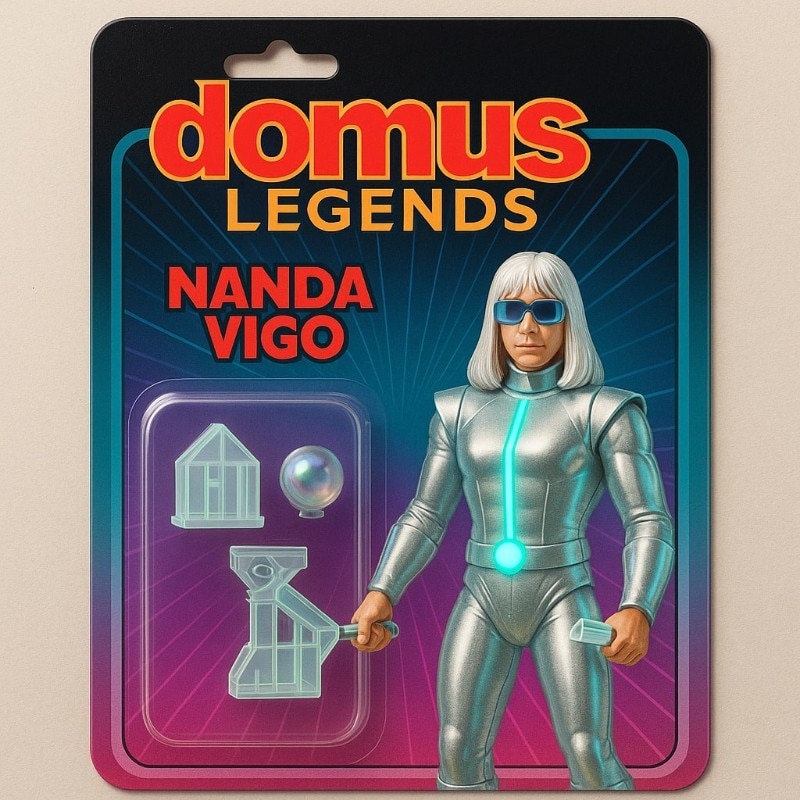

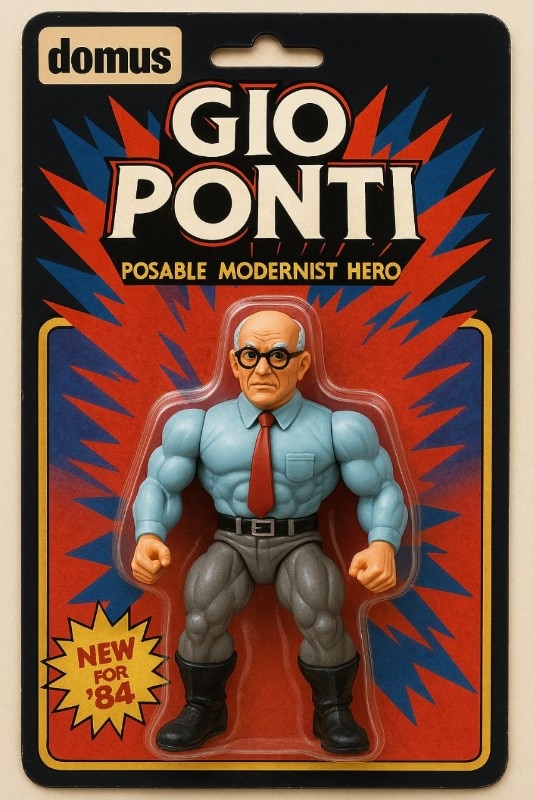

In recent weeks, social feeds have been flooded with personalized action figures generated by artificial intelligence. No longer limited to Barbies or branded action figures, these miniature collectibles now bear the faces of real people – your coworker, your best friend, Taylor Swift, Timothée Chalamet, or that girl you just scrolled past on TikTok's For You page. They’re presented like real collectible toys, complete with blister packaging and accessories.

Each figure is decked out with identity-defining props: a scarf, a laptop, a stethoscope, a guitar. These objects serve as reductive, tangible emblems of who we are, at least on LinkedIn, in Hollywood, or on SoundCloud.

All it takes is a photo and a few biographical details fed into a ChatGPT prompt, and in minutes, you get a tiny digital “mini-me” ready to be admired, shared, and commented on across Instagram or TikTok, for the small price of your biometric data.

It’s a seemingly nostalgic trend, treated like any other viral fad, yet it hovers somewhere between digital narcissism and an identity game. It reflects both a desire for representation and a drive to convert the self ever further into shareable content. In this strange ritual of turning humans into products, flesh into virtual plastic, and individuality into a standardized format, the line between person and persona blurs once more.

The result is an algorithmic mashup of pre-coded visual styles, leading to a uniform aesthetic: despite the diversity of the people portrayed, the figures all look oddly similar.

If we applied Aristotelian logic to this phenomenon, we might arrive at a syllogism like this: all singers have microphones; this action figure has a microphone; therefore, this must be a singer (or wannabe singer). The accessory becomes the essence; the symbol replaces the individual; the representation starts to blur with the represented. This reduction of identity into object-symbols reveals the rigid binary logic that underpins these algorithms: you are what you own, what you do, and the emblem that defines you. And perhaps a deeper truth lurks beneath it all: you want to be seen this way. You want to claim that spot.

But it’s not just about vanity or personal branding. The poses, backdrops, and attributes assigned to these action figures tend to recycle familiar narratives borrowed from mainstream pop culture. The algorithm rarely offers anything genuinely original or aesthetically groundbreaking; it just reshuffles what’s already widely recognized. Just like with AI-generated “Ghibli-style” art, there’s also the looming issue of copyright.

This homogenization brings with it another problem: cultural decontextualization. Myths, traditional iconography, and historical symbols are often repurposed as superficial decorative elements, stripped of their original significance.

And then there’s the environmental cost. According to the International Energy Agency, a single ChatGPT prompt consumes about ten times the energy of a Google search. Generating images uses even more: researchers at Carnegie Mellon University estimate that creating a single AI-generated image consumes two to five liters of water.

Technology is redrawing the lines between reality and representation, between individuality and algorithmic conformity. But the cost of this transformation isn’t just paid in the loss of uniqueness.