A study published in Science on October 24, 2019, showed that an algorithm used by many U.S. hospitals to manage patients and allocate resources discriminated against Black patients, because it estimated each patient's health needs from their average yearly healthcare spending. Since less money has historically been spent on Black patients with the same level of need, the algorithm systematically underestimated how sick they were. Black people are also frequently failed by facial recognition algorithms: smartphone cameras sometimes do not recognize their faces at all, while other systems disproportionately misidentify them as criminals. A 2018 test by the American Civil Liberties Union showed that Amazon's Rekognition, a computer vision service used by police departments in the U.S., incorrectly matched 28 members of Congress with mugshots of people who had been arrested for a crime. A disproportionate number of those misidentified lawmakers were people of color. These are just a few examples of how bias in technology already affects Black people today.

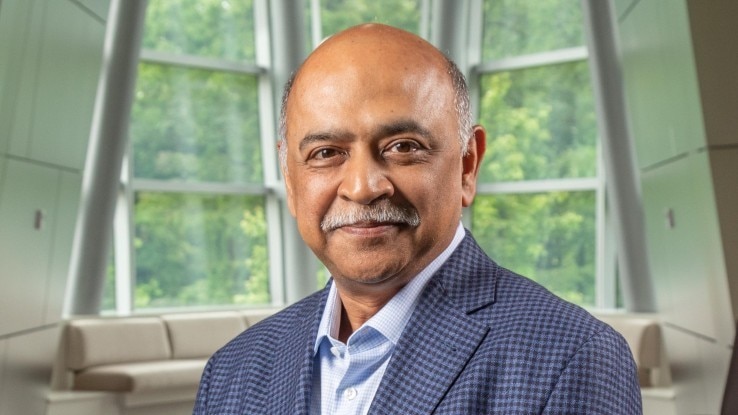

In the wake of Black Lives Matter protests around the world, IBM is now taking a meaningful stance on the issue by announcing that it will no longer offer or develop general-purpose facial recognition software. The decision was announced in a letter addressed to members of Congress, in which IBM CEO Arvind Krishna also explicitly called for reforms to advance racial justice and counter systemic racism, directly supporting the Justice in Policing Act introduced yesterday by top Democratic lawmakers.

“IBM firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency,” Krishna wrote.

“We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.”

Krishna's remarks are in line with IBM's longtime stance on the matter. Last year the company had already drawn attention to the issue by publishing Diversity in Faces, a dataset of face images that IBM described as more diverse and inclusive than anything available at the time. Machine learning and deep learning algorithms are only as good at identifying people and faces as the data they are trained on. If that data is skewed in a way that reflects existing societal bias, for example by containing far fewer images of darker-skinned faces, the software's output will inevitably be biased in the same way. It's a big, fat design flaw found in much of the facial recognition software used by police forces today (including the aforementioned Rekognition, which Amazon still insists on selling), and it ends up hurting, once again, the underrepresented and over-policed communities that were already affected by societal bias in the first place.

This is why IBM's decision matters. Facial recognition software was never a major moneymaker for the company, but completely giving up research and development on these AI-based solutions is an unconventional stance that is likely to send sizable ripples across the whole industry.

Opening picture from the movie Anon (2018).